Postgresql Incremental Backup by Charmi Chavda on May 28, 2020 1,468 views

Taking backups is essential nowadays. PostgreSQL provides three methods [SQL dump, File System Level Backup, Continuous Archiving(Incremental)] of providing backup. One can use any of these methods as per the need. In this article, we will learn to take Incremental backup on PostgreSQL. To understand Incremental Backup we first need to understand some basic terms related to it that is explained below:

What is WAL(Write Ahead Logging) file?

It is a file that stores operations that need to be done to the database. Changes in the database [in the form of DDL & DML queries] are first written into the memory shared buffer pool. Commit/Revert will be stored in the disk memory [WAL] file. Post that, it will be written into the disk.

Incremental backup vs Traditional backup methods

| SQL Dump | Incremental | File System Level | |

| In which form backup is stored? | DDL & DML Queries | WAL File | SQL Commands |

| What Information will be stored in backup? | Full database backup | Only changes will be part of backup | File that stores data |

| Restore backup at particular time? | No | Yes | No |

Tip: Incremental changes are backed up [WAL files] files and it justifies the name “Incremental Backup”.

Since we now have a basic idea of Incremental backup, let us see how it can be executed.

Steps of taking Incremental Backup

Below postgresSQL 9.5 and ubuntu is used to perform backup & restore.

# Create backup folder for WAL files

sudo -u postgres mkdir /var/lib/postgresql/archive

# Edit configurations files. sudo gedit

/etc/postgresql/9.5/main/postgresql.conf

wal_level = hot_standby (replica for >9.5)

archive_mode = on

max_wal_senders = 4

archive_command = 'test ! -f /var/lib/postgresql/archive/%f && cp %p /var/lib/postgresql/archive/%f'

cat << EOF | sudo tee -a /etc/postgresql/9.5/main/pg_hba.conf

local replication postgres peer

EOF

# Restart postgres

sudo pg_ctlcluster 9.5 main restart

# Create database and add data for testing backup.

sudo su - postgres

psql -c "create database employee;"

psql employee -c " create table employee_info (

id integer,

title character varying(100),

first_name character varying(100),

middle_name character varying(100),

last_name character varying(100),

created_at timestamp without time zone,

employement_type character varying(100)

);

insert into employee_info (id, title, first_name, middle_name, last_name, created_at, employement_type) values

(100, 'Miss', 'Chandani', 'Dahiyabhai', 'Dodia', '2018-01-01', 'Permanent'),

(101, 'Miss', 'Disha', 'Dahiyabhai', 'Dodia', now(), 'Permanent');"

# Take WAL file backup

psql -c "select pg_switch_xlog();" [Note: for postgres version >= 10 use pg_switch_wal() ]

# Take basebackuppg_basebackup -Ft -X fetch -D - | gzip > /var/lib/postgresql/db_backup.tar.gz

# changes in database after backup

psql employee -c " insert into employee_info (id, title, first_name, middle_name, last_name, created_at, employement_type) values

(102, 'Mr’, Chaman, 'Dahiyabhai', 'Dodia', '2018-01-01', 'Permanent'),

(103, 'Mr', 'Daya', 'Dahiyabhai', 'Dodia', now(), 'Permanent');"

wal_level : It determines how much information will be written in a WAL file.

archive_mode: On will make incremental backup enable.

archive_command : command to take a backup of WAL files.

max_wal_senders : Specifies the maximum number of concurrent connections from standby servers or streaming base backup clients (i.e., the maximum number of simultaneously running WAL sender processes). The default is zero, meaning replication is disabled.

Need to add replication in a different line for backup in pg_hba.

Restore Backup

# Stop postgres and delete all data

sudo systemctl stop postgresql@9.5-main

rm /var/lib/postgresql/9.5/main/* -r

ls /var/lib/postgresql/9.5/main/

# Restore postgres from backup

tar xvfz /var/lib/postgresql/db_backup.tar.gz -C /var/lib/postgresql/9.5/main/

nano /var/lib/postgresql/9.5/main/recovery.conf

restore_command = 'cp /var/lib/postgresql/archive/%f %p'

#recovery_target_time = specify time upto which backup need to be restored

# Restart postgres

sudo systemctl start postgresql@9.5-main

FAQ

1. Can incremental backup be taken from the individual database?

No. Incremental Backup is taken from the database cluster.

2. Can multiple recoveries be done on one backup?

Yes, it can be.

3. Suppose today is 01/12/2019 and base backup is taken on 01-September-2019 and recovery needs to be done up to 01-October-2019. After recovery up to 01-October-2019 should another recovery can be done up to 15-October-2019? Is it possible?

Yes, it is. Thanks to the timeline concept.

What is the timeline?

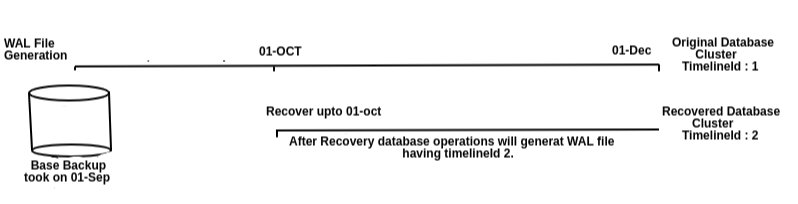

Timeline is used to differentiate originals from a recovered backup cluster. It has mainly two component timelineId and .history file.

TimelineId: The first 8 characters of WAL filename is timelineId. For initial backup, timelineid is 1 and it will increase with every recovery done from the database cluster.

History file: It contains Information about timeline branching. Which timeline is created from which timeline and when.

What is stored in the .history file of the timeline?

1 0/19000000 before 2019-10-01 05:30:00+05:30

4 0/1F000000 before 2019-12-02 05:30:00+05:30

Above is an example content of the history file. It contains 3

- from which timeline it recovered

- location of WAL-segment where the switch happened

- time of switch happen[Narration]

How will the restore work?

First, we need to restore the base backup and then WAL files need to be replayed to restore a backup.

For example, referring to figure 1.0 Let’s say base backup is taken on 01-September and on 01-December we need to restore the database cluster from backup.

1st time restore: Restore needs to be done up to 01-October. First base backup will be restored. Then WAL files will start restoring up to time mentioned in recovery.conf file [01-oct specific time]. After that, all WAL files are skipped. Then the new changes in the database will generate WAL file on timeline 2.

2nd time restore: If on 02-December database crashes then, then first base backup needs to be restored and timelineid [i.e. 2 in this case] parameter must be mentioned in recovery.conf file. So WAL files from timelineid 1 will start restoring up to 1st October. After that timelineId 2 WAL files will start restoring up to the time mentioned in recovery.conf.

How Postgres will decide which WAL segments of which timeline to replay/restore in the restore process?

It will be decided by the information stored in the .history file.

Refer to data stored in .history file, after base backup restored WAL files of timeline 1 up to 01 October 5:30 will play then WAL files of timeline 2 up to 02-December 5:30 will restore.

On 1-December-2019 database cluster is restored up to 1-October-2019 and on 3-December-2019 can data be merged from 15-October-2019 and data of 2-December-2019?

No. It will create a data inconsistency problem. [Let’s say in a table employee on 02-October a record is deleted with primary key 1099 and after restore on 01-December-2019 it will be in the database and now on 2nd December some changes are done on PK 1099. Now if we restore data up to 15-December-2019 then PK 1099 is deleted and 2-December will try to change the record that doesn’t exist.]

Is it possible to take incremental backup on a remote machine?

Yes [ command to use : pg_basebackup -X fetch -Ft -z -h ipaddress -U username -D directory-name ]

What if recovery_target_time is not specified in the recovery.conf file?

It will help in restoring the database cluster by storing details like up to which time database needs to be restored and from which timeline restore needs to process etc. After recovery, the filename will change from recovery.conf to recovery.done by the server.

References:

- http://www.interdb.jp/pg/

- https://www.postgresql.org/docs/9.5/

- https://postgresqlco.nf/en/doc/param/

- https://www.scalingpostgres.com/tutorials/postgresql-backup-point-in-time-recovery/